In many companies today, software already plays a decisive role: job applications are presorted, customer inquiries are prioritized, and risks are automatically assessed. The results appear objective; after all, they come from data.

But this is precisely where the real question begins: When is the use of such systems still ethically justifiable?

AI ethics refers to the rules and principles according to which AI systems are developed, used, and monitored. It deals with a question that currently comes up everywhere: According to which criteria are machines allowed to make decisions about people, and what responsibility remains with humans? This becomes relevant wherever software is used to make decisions about people, such as in credit assessments or medical evaluations. AI does not make such decisions consciously; it recognizes patterns in training data and calculates statistical probabilities from them.

UNESCO describes AI ethics as guidelines designed to ensure that the development and use of AI remain compatible with human rights and transparency. The EU High-Level Expert Group also refers to “trustworthy AI.” Behind this term are ethical principles for artificial intelligence: systems should be used lawfully, function reliably from a technical standpoint, and respect social values. AI ethics thus primarily describes governance, i.e., who is allowed to use AI, under what conditions, and with what safeguards for humans.

Who monitors the monitors?

The situation becomes critical as soon as systems judge people. This raises an old question anew: Quis custodiet ipsos custodes? Who monitors the monitors when decisions are no longer made by humans?

Infobox:

Ethical use of AI means above all traceability: decisions must be explainable, verifiable, and contestable.

Bias in AI: Why ethical risks arise in the first place

AI often appears objective. Decisions seem to be free of bias because they are based on numbers, models, and statistics. But even a machine can make unfair decisions. This is where bias (systematic distortion in data or models) comes into play. AI learns from past decisions and training data, and these contain human biases, historical inequalities, or one-sided data sets. A result can therefore be statistically consistent with the training data and still be unfair to those affected.

The reality of this problem was demonstrated in 2015, when the image recognition in Google Photos incorrectly labeled Black people as “gorillas.” A more harmless example can be seen in AI-generated images: analog clocks are strikingly often depicted at around 10:10. This is no coincidence but a result of the training material. In advertising and stock photography, clocks are presented in exactly this way, and the model learns this pattern.
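How such a distortion can be made visible in historical data is sketched below. This is only an illustration under stated assumptions: the group labels, the record layout, and the function names `selection_rates` and `rate_ratio` are hypothetical, and the numbers are made up. The sketch compares how often each group received a positive decision; a model trained on such a history would likely reproduce the gap.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive decisions per group.

    `records` is an iterable of (group, selected) pairs. The layout
    and the group labels are hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means equal)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Made-up historical decisions, for illustration only.
history = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(history)
print(rates)              # selection rate per group
print(rate_ratio(rates))  # values far below 1.0 point to a gap worth reviewing
```

A low ratio does not prove unfairness on its own, but it marks the point at which a human should look at the data before a system learns from it.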

Infobox:

AI can support decisions, but it cannot bear responsibility. That is why human control remains central to the ethics of autonomous systems.

AI ethics: Are employees allowed to use ChatGPT and similar tools?

In many companies, generative AI is already part of everyday work, often informally. Its practical benefits are undisputed: AI helps with formulating emails, summarizing texts, and conducting research. The real question is therefore no longer whether employees use such systems, but whether their use is regulated within the organization.

Prohibitions usually do not solve the problem; they merely shift usage into unofficial channels. Regulated use is more practical: AI can be used sensibly for drafting, structuring, overviews, or research, but not, for example, for specific personnel decisions, confidential contracts, or unpublished key figures.

AI ethics in companies therefore primarily means organization: clear internal guidelines, trained employees, and defined review processes. Only with such rules can spontaneous use become a controllable and legally compliant work process.

The most common misconception: human control

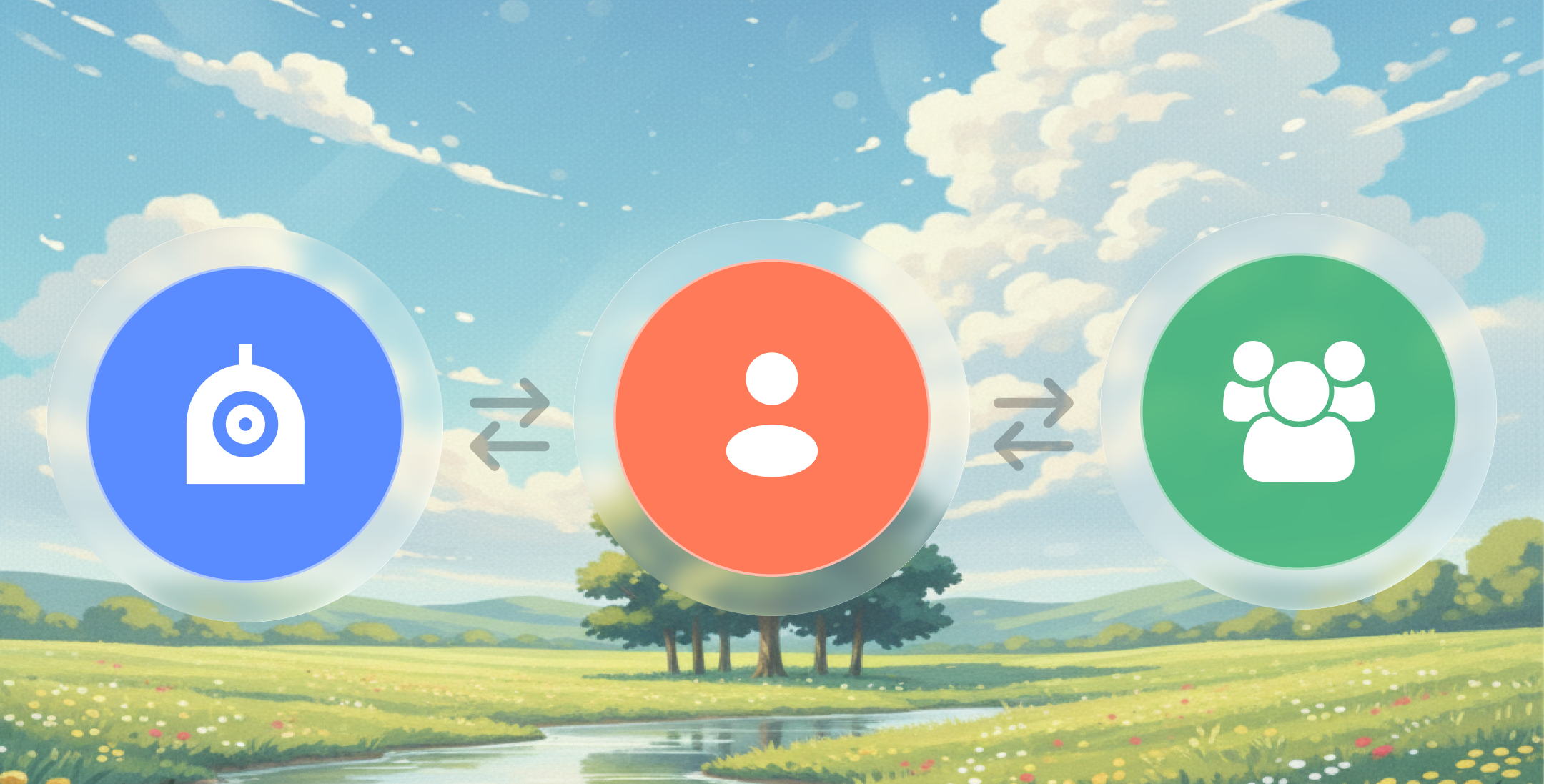

When talking about the risks of AI, the same reassuring answer almost always comes up: in the end, a human still reviews the result. That sounds reasonable. A system makes a suggestion, an employee checks it. Problem solved.

This principle is often described as human-in-the-loop: the machine analyzes, the human decides. In practice, however, something else happens. Those who only check a recommendation rarely make a completely new decision. The more complex a system appears and the faster it works, the more closely people follow its suggestion. They may correct details, but they hardly question the direction. The responsibility for AI decisions does not automatically return to humans; it just becomes more difficult to pin down.

And this is where the real difficulty in controlling AI systems arises: the developer sees a tool, the employee sees a recommendation, and the organization sees an automated process. Everyone is involved, and that is precisely why, in the end, no one really feels responsible.

That is why AI governance is not a question of whether a human is involved, but when they must be involved. Ethical use means determining in advance which decisions a system is allowed to prepare, where a human must make their own assessment, and when results can be challenged.
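Determining this in advance can be as simple as a table that maps each type of decision to the required level of human involvement. The following is a minimal sketch under assumptions: the three oversight levels, the decision names, and the function `required_oversight` are hypothetical examples, not an established standard.

```python
from enum import Enum


class Oversight(Enum):
    AUTOMATED = "system may decide; results stay contestable"
    HUMAN_REVIEW = "system prepares; a human confirms or corrects"
    HUMAN_ONLY = "a human makes their own assessment"


# Hypothetical policy, defined before any system goes live.
POLICY = {
    "prioritize_customer_inquiry": Oversight.AUTOMATED,
    "presort_job_application": Oversight.HUMAN_REVIEW,
    "reject_job_application": Oversight.HUMAN_ONLY,
}


def required_oversight(decision_type):
    """Look up the oversight level for a decision type.

    Decision types that nobody has classified yet fall back to the
    strictest level instead of being automated silently.
    """
    return POLICY.get(decision_type, Oversight.HUMAN_ONLY)


print(required_oversight("presort_job_application").value)
print(required_oversight("grant_loan").value)  # unclassified, so strictest level
```

The design choice that matters here is the default: anything not explicitly classified requires a human assessment.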

AI ethics in practice

The discussion about AI ethics often seems abstract until you consider where systems are actually used. The same point applies in all areas: the ethically acceptable use of AI does not mean doing without systems, but organizing them in a comprehensible way.

Infobox:

The use of AI is considered ethically acceptable if it is clear how results are obtained, who checks them, and who can intervene in the event of an error.

In science, the use of AI helps to evaluate large amounts of data, simulate new materials, or recognize medical patterns. At the same time, a new risk arises: if researchers can no longer fully understand the results, verification becomes more difficult.

In education, the problem manifests itself differently. Systems can summarize texts, explain tasks, or provide individual feedback. But AI in education is also changing assessment and learning itself. Teachers must decide whether AI is just a tool or part of the performance. What is ethically relevant here is not the technology, but the question of how to distinguish between personal achievement and support.

In companies, finally, it is mostly about decisions: presorting applications, detecting fraud, prioritizing customer inquiries. This is where AI guidelines in companies become necessary, i.e., defined procedures for when results must be checked, who is responsible, and how errors are corrected. Such organizational rules are often referred to as a Responsible AI Framework: not an additional technical module, but a binding process for the responsible use of automated assessments. Without these specifications, decisions are still made, but without clearly assigned responsibility.
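What "clearly assigned responsibility" could look like in data terms is sketched below. It assumes a simple record per automated assessment; the class `DecisionRecord`, its fields, and the example values are hypothetical and only illustrate the idea of documenting who checked a result and whether it was corrected.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    case_id: str
    system_suggestion: str       # what the system proposed
    responsible_person: str      # who is accountable for the outcome
    final_decision: Optional[str] = None
    reviewed: bool = False
    corrections: list = field(default_factory=list)
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def review(self, decision, note=""):
        """Store the human decision and log any deviation from the suggestion."""
        self.final_decision = decision
        self.reviewed = True
        if decision != self.system_suggestion:
            self.corrections.append(note or "suggestion overridden")


# Made-up example: a fraud flag that the reviewer does not follow.
record = DecisionRecord("case-001", "flag as fraud", "j.doe")
record.review("no fraud", note="customer confirmed the payment")
print(record.reviewed, record.final_decision, record.corrections)
```

A record like this answers the three questions from the text for every case: whether the result was checked, who is responsible, and how an error was corrected.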

Conclusion: AI ethics is less a moral issue than an organizational task

Many companies already use AI to presort job applications, prioritize customer inquiries, prepare evaluations, and draft texts. The real risk arises not from the technology, but from a lack of rules: Who is allowed to accept results? When do they need to be checked? And who is responsible for errors?

The widespread idea that “a human will check it in the end” is not enough. Human control only works if it is determined in advance when it is necessary and what needs to be checked. AI ethics therefore means above all regulating its use organizationally: responsibilities, audit obligations, and documentable decisions.

Today, companies are not faced with the choice of whether to use AI. The crucial question is whether they control its use or whether decisions are already being automated without being consciously regulated.

FAQ: AI ethics

Can AI act morally?

No. AI has no consciousness, no intentions, and no sense of responsibility. It calculates statistical probabilities based on data and rules. Moral evaluation and responsibility always remain with the people and the organization using the system.

What are the problems with AI?

Typical risks include incorrect results, biased decisions, and a lack of traceability. There is also a danger that people will accept recommendations without checking them. AI therefore becomes particularly problematic when its use is not clearly regulated or monitored.

Are there certifications for ethical AI products?

There is currently no uniform seal of approval. Instead, standards and testing procedures are being developed, for example within the framework of the EU AI Act or by standardization organizations such as ISO. In practice, companies therefore primarily review processes, documentation, and control mechanisms, not just the individual system.

What impact does AI have on ethics?

AI shifts responsibility: decisions are prepared or influenced without any single person having a complete overview. This makes transparency, control options, and clear responsibilities more important than before. Ethics thus becomes a question of organization and rules, not just technology.

Is it wrong to say “please” to ChatGPT?

No. The machine has no feelings, so it does not register politeness as courtesy. Like any other wording in a prompt, polite phrasing can influence the output, but it does not make the system better disposed toward the user. It can still be useful for humans, because a respectful tone structures their own communication and reduces misunderstandings.